Chapter 3: The mind-body problem

Matthew Van Cleave

Introduction: A pathway through this chapter

What is the relationship between the mind and the body? In contemporary philosophy of mind, there are myriad different, nuanced accounts of this relationship. Nonetheless, these accounts can be seen as falling into two broad categories: dualism and physicalism.[1] According to dualism, the mind cannot be reduced to a merely physical thing, such as the brain. The mind is a wholly different kind of thing than physical objects. One simple way a dualist might try to make this point is the following: although we can observe your brain (via all kinds of methods of modern neuroscience), we cannot observe your mind. Your mind seems inaccessible to third-person observation (that is, to people other than you) in a way that your brain isn’t. Although neuroscientists could observe activation patterns in your brain via functional magnetic resonance imaging, they could not observe your thoughts. Your thoughts seem to be accessible only in the first person: only you can know directly what you are thinking or feeling. Insofar as others can know this, they can know it only indirectly, through your behavior (including what you say and how you act). Readers of previous chapters will recognize that dualism is the view held by the 17th-century philosopher René Descartes, which I have referred to in earlier chapters as the Cartesian view of mind. In contrast with dualism, physicalism is the view that the mind is not a separate, wholly different kind of thing from the rest of the physical world. The mind is constituted by physical things. For many physicalists, the mind just is the brain. We may not yet understand how the mind/brain works, but physicalism is often motivated by something like Ockham’s razor: the principle that, all other things being equal, the simplest explanation is the best explanation. Physicalists think that all mind-related phenomena can be explained in terms of the functioning of the brain. So a theory that posits both the brain and another sui generis entity (a nonphysical mind or mental properties) violates Ockham’s razor: it posits two kinds of entities (brains and minds), whereas all that is needed to explain the relevant phenomena is one (brains).

The mind-body problem is best thought of not as a single problem but as a set of problems that attach to different views of the mind. For physicalists, the mind-body problem is the problem of explaining how conscious experience can be nothing other than brain activity, which has been called “the hard problem.” For dualists, the mind-body problem manifests itself as “the interaction problem”: the problem of explaining how nonphysical mental phenomena relate to or interact with physical phenomena, such as brain processes. Thus, no matter which view of the mind you take, there are deep philosophical problems. The mind, however we conceptualize it, seems to be shrouded in mystery. That is the mind-body problem. Below we will explore different strands of the mind-body problem, with an emphasis on physicalist attempts to explain the mind. In an era of neuroscience, it seems increasingly plausible that the mind is in some sense identical to the brain. But there are two putative properties of minds, especially human minds, that appear to resist physicalist explanation. These are the two properties we will focus on in this chapter: “original intentionality” (the mind’s ability to have meaningful thoughts) and “qualia” (the qualitative aspects of our conscious experiences).

We noted above the potential use of Ockham’s razor as an argument in favor of physicalism. However, this simplicity argument works only if physicalism can explain all of the relevant properties of the mind. A common tactic of the dualist is to argue that physicalism cannot explain all of the important aspects of the mind. We can view several of the famous arguments we will explore in this chapter (the “Chinese room” argument, Nagel’s “what is it like to be a bat” argument, and Jackson’s “knowledge argument”) as manifestations of this tactic. If the physicalist cannot explain aspects of the mind like “original intentionality” and “qualia,” then the simplicity argument fails. Physicalists, in turn, tend either to try to meet this explanatory challenge or to deny that these properties ultimately exist. The latter tactic can be clearly seen in Daniel Dennett’s responses to these challenges to physicalism, since he denies that original intentionality and qualia ultimately exist. This kind of eliminativist strategy, if successful, would keep the Ockham’s razor simplicity argument in place.

Representation and the mind

One aspect of mind that needs explaining is how the mind is able to represent things. Consider the fact that I can think about all kinds of different things: this textbook I am trying to write, how I would like some Indian food for lunch, my dog Charlie, how I wish I were running in the mountains right now. Medieval philosophers described the mind as having intentionality, the curious property of “aboutness”: the capacity of one thing to be about some other thing. In a certain sense, the mind seems to function like a mirror, since it reflects things other than itself. But unlike a mirror, whose reflected images are not inherently meaningful, minds seem to have what contemporary philosopher John Searle calls “original intentionality.” In contrast, the mirror has only “derived intentionality”: its image is meaningful only because something else gives it meaning or sees it as meaningful. Words, such as the word “tree,” also have only derived intentionality. “Tree” refers to trees, of course, but it is not as if the physical marks on a page inherently refer to trees. Rather, human beings who speak English use the word “tree” to refer to trees, just as Spanish speakers use the word “árbol.” In neither case do those physical marks on the page (or sound waves in the air, in the case of spoken words) inherently mean anything. Those physical phenomena are meaningful only because a human mind represents them as meaningful. Although we speak of the word itself as carrying meaning, this meaning is only derived intentionality. In contrast, the human mind has original intentionality because the mind is the ultimate source of meaningful representations. We can explain the meaningfulness of words in terms of thoughts, but then how do we explain the meaningfulness of the thoughts themselves? This is what philosophers are trying to explain when they investigate the representational aspect of mind.

There are many different attempts to explain what mental representation is. Here we will only briefly consider a few fairly rudimentary ideas, as a way of building up to a famous thought experiment that challenges a whole range of physicalist accounts of mental representation. Let’s start with a simple, straightforward idea: mental images. Perhaps what my mind does when it represents my dog Charlie is create a mental image of Charlie. This account seems to fit our first-person experience, at least in certain cases, since many people would describe their thoughts in terms of images in their mind. But whatever a mental image is, it cannot be like a physical image, because physical images require interpretation by something else. When I represent my dog Charlie, it can’t be that my thoughts about Charlie just are some kind of image or picture of Charlie in my head, because that picture would require a mind to interpret it! Suppose the image is supposed to be the thing that has “original intentionality.” If our explanation then requires some other thing with original intentionality to interpret the image, the mental image isn’t really the thing that has original intentionality. Rather, the thing interpreting the image would have original intentionality. A problem looms here that threatens to drive the mental image view of mental representation into incoherence: the object in the world is represented by a mental image, but that mental image itself requires interpretation by something else. It would be problematic for the mental image proponent to then say that there is some other inner “understander” that interprets the mental image. For how does this inner understander understand? By virtue of another mental image in its “head”? Such a view would create what philosophers call an infinite regress: a series of explanations, each requiring a further explanation, so that ultimately nothing is explained. The philosopher Daniel Dennett sees explanations of this sort as committing what he calls “the homuncular fallacy,” after the Latin term homunculus, which means “little man.” The problem is that if we explain the nature of the mind by, in essence, positing another inner mind, then we haven’t really explained anything, for that inner mind itself needs to be explained. Positing a further inner mind inside the first inner mind leads us into an infinite regress, and this is fatal to any successful explanation of the phenomenon in question, namely mental representation or intentionality.

Within the cognitive sciences, one popular way of understanding the nature of human thought is to see the mind as something like a computer. A computer is a device that takes certain inputs (representations), transforms those inputs in accordance with certain rules (the program), and then produces a certain output (behavior). The idea is that the computer metaphor gives us a satisfying way of explaining what human thought and reasoning are, and does so in a way that is compatible with physicalism. The idea, popular in philosophy and cognitive science since the 1970s, is that brain states instantiate a kind of language of thought. This language is similar to a natural language in that it possesses both a grammar and a semantics. The difference is that the representations in the language of thought have original intentionality, whereas the representations in natural languages (like English and Spanish) have only derived intentionality. One central question in the philosophy of mind concerns how these “words” in the language of thought get their meaning. We have seen above that these representations can’t just be mental images, and there’s a further reason why mental images don’t work for the computer metaphor of the mind: mental images don’t have syntax the way language does. You can’t create meaningful sentences by putting together a series of pictures, because there are no rules for how those pictures combine their parts into a holistic meaning. For example, how could a picture (or a series of pictures) represent the thought that Leslie wants to go out in the rain but not without an umbrella? How do I represent someone’s desire with a picture? Or how do I represent the negation of something with only a picture? True, there are devices that we can use within pictures, such as the “no” symbol on no-smoking signs. But such symbols are no longer functioning as purely pictorial representations, which represent in virtue of their similarity to what they depict. There is no pictorial similarity between the purely logical notion “not” and any picture we could draw. So whatever the words of the language of thought (that is, mental representations) are, their meaning cannot derive from a pictorial similarity to what they represent. We need some other account. Philosophers have given many such accounts, but most of them attempt to understand mental representation in terms of a causal relationship between objects in the world and representations. That is, whatever types of objects cause (or would cause) certain brain states to “light up,” so to speak, are what those brain states represent. So if there’s a particular brain state that lights up any time I see (or think about) a dog, then dogs are what that mental representation stands for. Delving into the nuances of contemporary theories of representation is beyond the scope of this chapter. The important point is that the language of thought hypothesis these theories support is supposed to be compatible with physicalism, as well as with the computer analogy for explaining the mind. On this account, the “words” of the language of thought have original intentionality, and thinking is just the manipulation of these “words” using certain syntactic rules (the “program”). These rules are hard-wired into the brain (either innately or by learning) and are akin to the grammar of a natural language.
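The crude causal idea above can be made concrete as a toy lookup. The following Python sketch is purely illustrative. The brain-state labels and the observation log are hypothetical. As a simplifying assumption, the sketch also treats a state as standing for whatever stimulus type most often caused it in the log. Actual causal theories of representation are far more sophisticated than this.

```python
from collections import Counter, defaultdict

# Hypothetical log of (stimulus type, brain state that "lit up") pairs.
OBSERVATIONS = [
    ("dog", "state_17"),
    ("dog", "state_17"),
    ("cat", "state_42"),
    ("dog", "state_17"),
    ("cat", "state_42"),
]


def assign_contents(observations):
    """Toy covariation account: each state is assigned the stimulus type
    that most often caused it to light up in the log."""
    causes = defaultdict(Counter)  # state -> counts of the stimuli that caused it
    for stimulus, state in observations:
        causes[state][stimulus] += 1
    return {state: counts.most_common(1)[0][0] for state, counts in causes.items()}


print(assign_contents(OBSERVATIONS))
```

The sketch only shows the shape of the idea. Content is fixed by which stimuli cause which states, not by any resemblance between a state and what it stands for.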

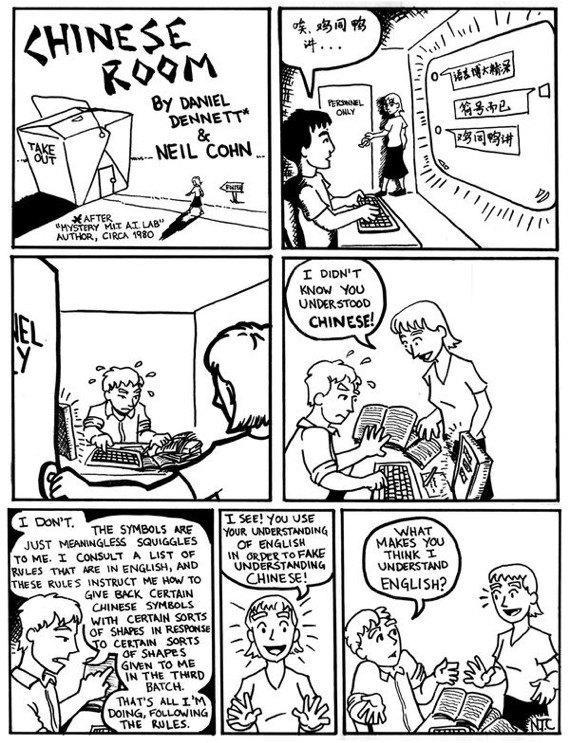

There is a famous objection to the computer analogy of human thought from the philosopher John Searle. Searle thinks his objection shows that human thought and understanding cannot be reduced to the kind of thing that a computer can do. Searle’s thought experiment is called the Chinese room. Imagine that there is a room with a man inside of it. The man takes slips of paper that are passed into the room through a slit. The slips of paper have writing on them that looks like this:

![]()

The room also contains a giant bookshelf with many different volumes of books. Those books are labeled something like this:

Volume 23: Patterns that begin with ![]()

When the man sees the slip of paper with the characters ![](), he goes to the bookshelf, pulls out volume 23, and looks for this exact pattern. When he finds it, he sees an entry that looks something like this:

When you see ![](), write ![]()

The man writes the symbols on the slip and then passes it back through the slit in the wall. From the perspective of the man in the room, this is all he does. Nothing more, nothing less. The man inside the room doesn’t understand what these symbols mean; to him they are just meaningless squiggles on a page. He tells the symbols apart merely by their shapes. However, outside the room, Chinese speakers are writing questions on the slips of paper and passing them through the slit. They come to believe that the Chinese room (or something inside it) understands Chinese and is thus intelligent.
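The man’s procedure is, in effect, a lookup algorithm. Here is a minimal Python sketch of it, under clearly hypothetical assumptions. The “library” holds a single volume, indexed by the first character of a pattern, as in the volume labels above. The two entries and the fallback reply are invented examples, with rough English glosses in the comments. A rulebook that could actually fool a Chinese speaker would be astronomically larger.

```python
# A minimal, hypothetical sketch of the man's procedure in the Chinese room.
# Volumes are indexed by the first symbol of a pattern; each volume pairs an
# exact input pattern with the pattern to write back.
LIBRARY: dict[str, dict[str, str]] = {
    "你": {
        "你好吗？": "我很好，谢谢。",        # "How are you?" / "I'm fine, thanks."
        "你会说中文吗？": "会，说得很好。",  # "Can you speak Chinese?" / "Yes, very well."
    },
}

# Hypothetical reply for patterns with no entry: "Please say that again."
FALLBACK = "请再说一遍。"


def chinese_room(slip: str) -> str:
    """Return the reply the rulebook pairs with this exact string of symbols."""
    # Pick the volume by the first symbol alone...
    volume = LIBRARY.get(slip[:1], {})
    # ...then look up the whole pattern as an exact string match. Nothing in
    # this function represents what any of the symbols mean.
    return volume.get(slip, FALLBACK)


print(chinese_room("你好吗？"))
```

Making the dictionary larger would not change the kind of process involved. That is precisely the intuition Searle’s argument relies on.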

The Chinese room is essentially a scenario in which a computer program passes the Turing Test. In a paper published in 1950, Alan Turing proposed a test for determining whether or not a machine can think. Basically, the test is whether the machine can make a human investigator believe that the machine is a human. The human investigator can ask the machine any questions they can think of (Turing imagined that the exchange would be conducted via typed responses on a keyboard). Imagine what some of the questions might be. Here is one such potential question:

Rotate a capital letter “D” 90 degrees counterclockwise and place it atop a capital letter “J.” What kind of weather does this make you think of?

A computer that could pass the Turing Test would be able to answer questions such as this and thus would make a human investigator believe that the computer was actually another human being. Turing thought that if a machine could do this, we should count that machine as having intelligence. The Chinese room thought experiment is supposed to challenge Turing’s claim that something that can pass the Turing Test is thereby intelligent. The essence of a computer is that of a syntactic machine. A syntactic machine takes symbols as inputs, manipulates those symbols in accordance with a series of rules (the program), and gives the outputs that the rules dictate. Importantly, we can understand what syntactic machines do without having to say that they interpret or understand their inputs and outputs. In fact, a syntactic machine cannot possibly understand the symbols, because there’s nothing there to understand. For example, in modern-day computers the symbols being processed are strings of 1s and 0s. These are physically instantiated in the CPU as a series of on/off voltages (that is, in the states of transistors). Note that a series of voltages is no more inherently meaningful than the fluttering patterns of a flag waving in the wind, or a series of waves hitting a beach, or a series of footsteps on a busy New York City subway platform. They are merely physical patterns, nothing more, nothing less. What a computer does, in essence, is “read” these inputs and give outputs in accordance with the program. The simple theoretical (mathematical) device that does this is called a “Turing machine,” after Alan Turing. A calculator can be thought of as a simple, special-purpose machine of this kind. In contrast, a modern-day computer is an example of what is called a “universal Turing machine.” It is universal because it can run any number of different programs, which allow it to compute all kinds of different outputs. A simple calculator, by contrast, runs only a couple of simple programs, ones that correspond to the different mathematical functions the calculator has (+, −, ×, ÷). The Chinese room has all the essential parts of a computer and functions exactly as a computer does: the man “reads” the symbols and produces outputs using symbols, in accordance with what the program dictates. If the program is sufficiently well written, then the man’s responses (the room’s output) will be able to convince someone outside the room that the room (or something inside it) understands Chinese.
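The idea of a machine that “reads” symbols and writes outputs according to a table of rules can be made concrete with a minimal Turing machine simulator in Python. This is a sketch, not a standard implementation. The names `run`, `INCREMENT`, and `BLANK`, and the choice of binary increment as the program, are illustrative assumptions. At each step the simulator consults only the current state and the symbol under the head. It never needs to know that the strings of 1s and 0s denote numbers.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical program: add 1 to a binary number written on the tape.
# Each rule maps (state, symbol read) to (symbol to write, head move, next state).
INCREMENT = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 plus carry: write 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 plus carry: write 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left end: write a new 1
}


def run(program, tape_str, state="carry", max_steps=10_000):
    """Apply the rules mechanically until the machine reaches the 'halt' state."""
    # Unvisited cells read as BLANK, so the tape is unbounded in both directions.
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))
    head = len(tape_str) - 1  # start on the rightmost symbol
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("no halt within max_steps")
        write, move, state = program[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read off the stretch of tape between the outermost non-blank cells.
    filled = [i for i, s in tape.items() if s != BLANK]
    if not filled:
        return ""
    return "".join(tape[i] for i in range(min(filled), max(filled) + 1))


print(run(INCREMENT, "1011"))
```

Because `run` takes the rule table as an argument, the same loop can execute a different program. This loosely echoes the contrast with a calculator. A genuine universal Turing machine goes further and reads the program itself from the tape.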

But the whole point is that there is nothing inside the room that understands Chinese. The man in the room doesn’t understand Chinese; the characters are just meaningless symbols to him. The written volumes don’t understand Chinese either. How could they? Books don’t understand things. Furthermore, Searle argues that understanding of Chinese doesn’t just magically emerge from the combination of all the parts of the Chinese room: if none of the parts of the room has any understanding of Chinese, then neither does the whole room. Thus, the Chinese room thought experiment is supposed to be a counterexample to the Turing Test: the Chinese room passes the Turing Test, but it doesn’t understand Chinese. Rather, it just acts as if it understands Chinese. Without understanding, there can be no thought. The Chinese room, impressive as it is for passing the Turing Test, lacks any understanding and therefore is not really thinking. Likewise, a computer cannot think, because a computer is merely a syntactic machine that does not understand its inputs or outputs. From the perspective of the computer, the strings of 1s and 0s are just meaningless symbols.[2] The people outside the Chinese room might ascribe thought and understanding of Chinese to the room, but there is neither thought nor understanding involved. Likewise, someone may eventually create a computer program that passes the Turing Test,[3] and we might think that machine has thought and understanding. The Chinese room is supposed to show that we would be wrong to think this. No merely syntactic machine could ever think, because no merely syntactic machine could ever understand. That is the point of the Chinese room thought experiment.

We could put this point in terms of the distinction between original and derived intentionality: no amount of derived intentionality will ever get you original intentionality. Computers have only derived intentionality, and since genuine thought requires original intentionality, it follows that computers could never think. Here is a reconstructed version of the Chinese room argument:

1. Computers are merely syntactic machines.
2. Therefore, computers lack original intentionality (from 1).
3. Thought requires original intentionality.
4. Therefore, computers cannot think (from 2 and 3).
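As reconstructed, the step from 1 to 2 relies on an unstated bridging premise, the one expressed above as “no amount of derived intentionality will ever get you original intentionality.” In other words, whatever is merely syntactic lacks original intentionality. The following Lean 4 sketch makes that premise explicit and checks that, with it, the conclusion follows. The predicate and hypothesis names are illustrative placeholders. The sketch shows only that the argument is valid. Whether its premises are true is a separate question.

```lean
-- Placeholder predicates over an arbitrary type of things.
theorem chinese_room {Thing : Type}
    (Computer Syntactic OriginalIntentionality Thinks : Thing → Prop)
    -- Premise 1: computers are merely syntactic machines.
    (p1 : ∀ x, Computer x → Syntactic x)
    -- Bridging premise (made explicit here): syntax alone yields no original intentionality.
    (bridge : ∀ x, Syntactic x → ¬ OriginalIntentionality x)
    -- Premise 3: thought requires original intentionality.
    (p3 : ∀ x, Thinks x → OriginalIntentionality x) :
    -- Conclusion 4: computers cannot think.
    ∀ x, Computer x → ¬ Thinks x :=
  -- `bridge x (p1 x hComputer)` is step 2; applying it to `p3 x hThinks` yields False.
  fun x hComputer hThinks => bridge x (p1 x hComputer) (p3 x hThinks)
```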

How should we assess the Chinese room argument? One thing to say is that it seems to rely on a lot of simplifying assumptions about the room. For example, the philosopher Daniel Dennett suggests that in order to pass the Turing Test a computer would need something on the order of 100 billion lines of code. It would take the man inside the room many lifetimes to hand-simulate that code in the way we are invited to imagine. Searle thinks that such practical considerations can be dismissed; for example, we can just imagine that the man inside the room can operate faster than the speed of light. Searle thinks these assumptions are not problematic. Why should mere speed of operation make any difference to the theoretical point he is trying to make, namely that the merely syntactic processing of a digital computer could not achieve understanding? Dennett, on the other hand, thinks that such simplifying assumptions should alert us that there is something fishy going on with the Chinese room thought experiment. If we were really, truly imagining a computer program that could pass the Turing Test, Dennett thinks, then it wouldn’t sound nearly as absurd to say that the computer had thought.

There’s a deeper objection to the Chinese room argument, sometimes referred to as the “other minds reply.” The essence of the Chinese room rebuttal of the Turing Test involves, so to speak, looking at the guts of what is going on inside of a computer. When you look at it “up close,” it certainly doesn’t seem like all of that syntactic processing adds up to intelligent thought. However, one can make exactly the same point about the human brain, which Searle believes is undoubtedly capable of thought. Neither the firing of individual neurons nor the spike trains of whole populations of neurons look like what we think of as intelligent thought. Far from it! But of course it doesn’t follow that human brains aren’t thinking! The problem is that in both cases we are looking at the wrong level of description. In order for us to be able to “see” the thought, we must be looking in the right place.

Zooming in and looking at the mechanics of the machines up close is not going to enable us to see the thought and intelligence. Rather, we have to zoom out to the level of behavior and observe the responses in their context. Thought isn’t something we can see up close; rather, thought is something that we attribute to something whose behavior is sufficiently intelligent. Dennett suggests the following cartoon as a reductio ad absurdum of the Chinese room argument:

In the cartoon, Dennett imagines a woman going into the Chinese room to see what is going on inside. Once inside, she sees the man responding to the questions of Chinese speakers outside the room. The woman tells the man (perhaps someone she knows), “I didn’t know you knew Chinese!” In response, the man explains that he doesn’t and that he is just looking up the relevant strings of Chinese characters to write in response to the inputs he receives. The woman’s interpretation of this is: “I see! You use your understanding of English in order to fake understanding Chinese!” The man’s response is: “What makes you think I understand English?” The joke is that the woman’s only evidence for thinking that the man understands English is his spoken behavior. This is exactly the same kind of evidence that the Chinese speakers have of the Chinese room. So if this evidence is good enough for the woman inside the room to say that the man understands English, why is the evidence of the Chinese speakers outside the room any different? We can make the problem even more acute. Suppose that we were to look inside the brain of the man in the room. We would see all kinds of neural activity, and we could then say, “Hey, this doesn’t look like thought; it’s just bunches of neurons sending chemical messages back and forth, and those chemical signals have no inherent meaning.” Dennett’s point is that this response makes the same kind of mistake that Searle makes in supposing a computer can’t think: in both cases, we are focusing on the wrong level of detail. Neither the innards of the brain nor the innards of a computer look like there’s thinking going on. Rather, thinking only emerges at the behavioral level; it only emerges when we are listening to what people are saying and, more generally, observing what they are doing. This is what is called the other minds reply to the Chinese room argument.

Interlude: Interpretationism and Representation

The other minds reply points us toward a radically different account of the nature of thought and representation. A common assumption in the philosophy of mind (and one that Searle also makes) is that thought (intentionality, representation) is something to be found within the inner workings of the thinking thing, whether we are talking about human minds or artificial minds. In contrast, on the account that Dennett defends, thought is not a phenomenon to be observed at the level of the inner workings of the machine. Rather, thought is something that we attribute to people in order to understand and predict their behavior. To be sure, the brain is a complex mechanism that causes our intelligent behaviors (as well as our unintelligent ones). But to look inside the brain for some language-like representation system is to look in the wrong place. Representations aren’t something we will find in the brain; they are just something that we attribute to certain kinds of intelligent things (paradigmatically human beings) in order to better understand those beings and predict their behavior. This view of the nature of representation is called interpretationism and can be seen as a kind of instrumentalism. Instrumentalists about representation believe that representations aren’t, in the end, real things. Rather, they are useful fictions that we attribute in order to understand and predict certain behaviors. For example, if I am playing a game of chess against the computer, I might explain the computer’s behavior by attributing certain thoughts to it, such as, “The computer moved the pawn in front of the king because it thought that I would put the king in check with my bishop and it didn’t want to be in check.” I might also attribute thoughts to the computer in order to predict what it will do next: “Since the computer would rather lose its pawn than its rook, it will move the pawn in front of the king rather than the rook.” None of this requires that there be internal representations inside the computer that correspond to the linguistic representations we attribute. According to interpretationism, the fundamental insight about representation is this: we merely interpret computers as having internal representations, without being committed to the idea that they actually contain those representations. So too we merely interpret human beings as having internal representations, without being committed to whether or not they actually contain them. It is useful, for the purpose of explaining behavior, to interpret humans as having internal representations, even if they don’t actually have them.

Interpretationist accounts of representation raise deep questions about where meaning and intentionality reside, if not in the brain, but we will not be able to broach those questions here. Suffice it to say that the disagreement between Searle and Dennett over the Chinese room traces back to what I would argue is the most fundamental rift within the philosophy of mind. This is the rift between the Cartesian view of the mind, on the one hand, and the behaviorist tradition, on the other. Searle’s view of the mind, specifically his notion of “original intentionality,” traces back to a Cartesian view of the mind. On this view, the mind contains something special, something that cannot be captured merely by “matter in motion” or by any kind of physical mechanism. The mind is sui generis and is set apart from the rest of nature. For Searle, meaning and understanding have to trace back to an “original” mean-er or understand-er. And that understand-er cannot be a mindless mechanism (which is why Searle thinks that computers can’t think). For Searle, as for Descartes, thinking is reserved for a special (one might say, magical) kind of substance. Searle himself rejects Descartes’s conclusion that the mind is nonphysical. Even so, he retains the Cartesian idea that thinking is carried out by a special, quasi-magical kind of substance. Searle thinks that this substance is the brain. He holds that the brain has special causal powers that cannot be replicated or copied in any other kind of physical object (for example, an artificial brain made out of metal and silicon). Dennett’s behaviorist view sees the mind as nothing other than a complex physical mechanism that churns out intelligent behaviors. We then classify those behaviors using a special mental vocabulary: the vocabulary of “minds,” “thoughts,” “representations,” and “intentionality.” The puzzle for Dennett’s behaviorist view is: How can there be meaning and understanding without any original meaner/understander? How can there be only derived intentionality and no original intentionality?

Consciousness and the mind

Interpretationism sees the mind as a certain kind of useful fiction: we attribute representational states (thoughts) to people in virtue of their intelligent behavior, and we do so in order to explain and predict their behavior. The causes of one’s intelligent behavior are real, but the representational states that we attribute need not map neatly onto any particular brain states. Thus, there need not be any particular brain state that represents the content “Britney Spears is a washed-up pop star,” for example.

But there is another aspect of our mental lives that seems more difficult to explain away in the way interpretationism explains away representation and intentionality. This aspect of our mind is first-person conscious experience. To borrow a term from Thomas Nagel, conscious experience refers to the “what it’s like” of our first-person experience of the world. For example, I am sitting here at my table with a blue thermos filled with coffee. The coffee has a distinctive, qualitative smell that would be difficult to describe to someone who has never smelled it before. Likewise, the blue of the thermos has a distinctive visual quality, a “what it’s like,” that is different from what it’s like to see, say, red. These experiences (the smell of the coffee, the look of the blue) are aspects of my conscious experience, and they have a distinctive qualitative dimension: there is something it’s like to smell coffee and to see blue. This qualitative character seems in some sense to be ineffable. That is, it would be very difficult, if not impossible, to convey what it is like to someone who had never smelled coffee or to someone who had never seen the color blue. Imagine someone who was colorblind. How would you explain what blue was to them? Sure, you could tell them that it was the color of the ocean. But that would not convey the particular quality that you (someone who is not colorblind) experience when you look at a brilliant blue ocean or lake. Philosophers have coined a term to refer to the qualitative aspects of our conscious experience: qualia. It seems that our conscious experience is real and cannot be explained away in the way that representation can. Maybe there needn’t be anything similar to sentences in my brain, but how could there not be colors, smells, feels? The feeling of stubbing your toe and the feeling of an orgasm are very different feels (thank goodness), but it seems that they are both very much real things. Suppose neuroscientists were able to explain exactly how your brain causes you to respond to stubbing your toe. Such an explanation would seem to leave something out if it neglected the feeling of the pain. From our first-person perspective, our experiences seem to be the most real things there are, so it doesn’t seem that we could explain their reality away.

Physicalists need not disagree that conscious experiences are real; they would simply claim that they are ultimately just physical states of our brain. Although that might seem to be a plausible position, there are well-known problems with claiming that conscious experiences are nothing other than physical states of our brain. The problem is that it does not seem that our conscious experience could just reduce to brain states—that is, to the neurons in our brain sending lots and lots of chemical messages back and forth simultaneously. The 17th century philosopher Gottfried Wilhelm Leibniz (1646-1716) was no brain scientist (brain science would take another 250 years to develop), but he put forward a famous objection to the idea that consciousness could be reduced to any kind of mechanism (and the brain is one giant, complex mechanism). Leibniz’s objection is sometimes referred to as “Leibniz’s mill.” In 1714, Leibniz wrote:

Moreover, we must confess that perception, and what depends on it, is inexplicable in terms of mechanical reasons, that is, through shapes and motions. If we imagine that there is a machine whose structure makes it think, sense, and have perceptions, we could conceive it enlarged, keeping the same proportions, so that we could enter into it, as one enters into a mill. Assuming that, when inspecting its interior, we will only find parts that push one another, and we will never find anything to explain a perception (Monadology, section 17).

Leibniz uses a famous form of argument here called reductio ad absurdum: he assumes for the sake of the argument that thinking is a mechanical process and then shows how that leads to the conclusion that thinking cannot be a mechanical process. We could put Leibniz’s exact same point into the language of 21st century neuroscience: imagine that you could enlarge the size of the brain (in a sense, we can already do this with the help of the tools of modern neuroscience). If we were to enter into the brain (perhaps by shrinking ourselves down), we would see all kinds of physical processes going on (billions of neurons sending chemical signals back and forth). However, to observe all of these processes would not be to observe the conscious experiences of the person whose brain we were observing. That means that conscious experiences cannot reduce to physical brain mechanics. The simple point being made is that in conscious experience there exist all kinds of qualitative properties (qualia)—red, blue, the smell of coffee, the feeling of getting your back scratched—but none of these properties would be the properties observed in observing someone’s brain. All you will find on the inside is “parts that push one another” and never the properties that appear to us in first-person conscious experience.

The philosopher David Chalmers has coined a term for the problem that Leibniz was getting at. He calls it the hard problem of consciousness and contrasts it with the easy problems of consciousness. The “easy” problems of mind science involve questions about how the brain carries out functions that enable certain kinds of behaviors—functions such as discriminating stimuli, integrating information, and using the information to control behavior. These problems are far from easy in any normal sense—in fact, they are some of the most difficult problems of science. Consider, for example, how speech production occurs. How is it that I decide what exactly to say in response to a criticism someone has just made of me? The physical processes involved are numerous and include the sound waves of the person’s words hitting my eardrum, those physical signals being carried to the brain, that information being integrated with the rest of my knowledge and, eventually, my motor cortex sending certain signals to my vocal cords that then produce the sounds, “I think you’re misunderstanding what I meant when I said…” or whatever I end up saying. We are still a long way from understanding how this process works, but it seems like the kind of problem that can be solved by doing more of the same kinds of science that we’ve been doing. In short, solving easy problems involves understanding the complex causal mechanisms of the brain. In contrast, the hard problem is the problem of explaining how physical processes in the brain give rise to first-person conscious experience. The hard problem does not seem to be the kind of problem that could be solved by simply investigating in more detail the complex causal mechanism that is the brain. Rather, it seems to be a conceptual problem: how could it be that the colors, the sounds, and the smells that constitute our first-person conscious experience of the world are nothing other than neurons firing electrochemical signals back and forth?
As Leibniz pointed out over 300 years ago, the one seems to be a radically different kind of thing from the other.

In fact, it seems that a being could have all of the functioning of a normal human being and yet lack any conscious experience. There is a term for such a being: a philosophical zombie. Philosophical zombies are by definition beings that are functionally indistinguishable from you or me but who lack any conscious experience. If we assume that it’s the functioning of the brain that causes all of our intelligent behaviors, then it isn’t clear what conscious experience could possibly add to our repertoire of intelligent behaviors. Philosophical zombies can help illustrate the hard problem of consciousness, since if such creatures are theoretically possible, then consciousness doesn’t seem to reduce to any kind of brain functioning. By hypothesis, the brain of the normal human being and the brain of the philosophical zombie are identical. It’s just that the latter lacks consciousness whereas the former doesn’t. If this is possible, then consciousness does indeed seem to be quite a mysterious thing for the physicalist.

There are two other famous thought experiments that illustrate the hard problem of consciousness: Frank Jackson’s knowledge argument and Thomas Nagel’s “What is it like to be a bat?” argument.

Nagel’s argument against physicalism turns on a colorful example: could we (human beings) imagine what it would be like to be a bat? Although bats are mammals, and thus not so different from human beings phylogenetically, their experience would seem to be radically different from ours. Bats echolocate around in the darkness, they eat bugs at night, and they sleep while hanging upside down. Human beings could try to do all these things, but even if they did, they would arguably not be experiencing these activities the way a bat does. And yet it seems pretty clear that bats (being mammals) have some kind of subjective experience of the world—a “what it’s like” to be a bat. The problem is that although we can figure out all kinds of physical facts about bats—how they echolocate, how they catch insects in the dark, and so on—we cannot ever know what it’s like to be a bat. For example, although we could understand enough scientifically to be able to send signals to the bat that would trick it into trying to land on what it perceived as a ledge, we could not know what it’s like for the bat to perceive an object as a ledge. That is, we could understand the causal mechanisms that make the bat do what the bat does, but that would not help us to answer the question of what it’s like to experience the world the way a bat experiences the world. Nagel notes that it is characteristic of science to study physical facts (such as how the brain works) that can be understood in a third-person kind of way. That is, anyone with the relevant training can understand a scientific fact. If you studied the physics of echolocation and also a lot of neuroscience of bat brains, you would be able to understand how a bat does what a bat does. But this understanding would seem to bring you no closer to what it’s like to be a bat—that is, to the first-person perspective of the bat. We can refer to the facts revealed in first-person conscious experience as phenomenal facts.
Phenomenal facts are things like what it’s like to see blue or smell coffee or experience sexual pleasure…or echolocate around the world in total darkness. Phenomenal facts are qualia, to use our earlier term. Nagel’s point is that if the phenomenal facts of conscious experience are only accessible from a first-person perspective and scientific facts are always third-person, then it follows that phenomenal facts cannot be grasped scientifically. Here is a reconstruction of Nagel’s argument:

1. The phenomenal facts presented in conscious experience are knowable only from the first-person (subjective) perspective.

2. Physical facts can always be known from the third-person (objective) perspective.

3. Nothing that is knowable only from the first-person perspective could be the same as (reduce to) something that is knowable from the third-person perspective.

4. Therefore, the phenomenal facts of conscious experience are not the same as physical facts about the brain. (from 1-3)

5. Therefore, physicalism is false. (from 4)

Nagel uses an interesting analogy to explain what’s wrong with physicalism—the claim that conscious states are nothing other than brain states. He imagines an ancient Greek saying that “matter is energy.” It turns out that this statement is true (Einstein’s famous E = mc²), but an ancient Greek person could not possibly have understood how it could be true. The problem is that the ancient Greek person would not have had the conceptual resources needed to understand what this statement means. Nagel claims that we are in the same position today when we say that something like “conscious states are brain states” is true. It might be true; we just cannot yet understand what that could possibly mean because we don’t have the conceptual resources for understanding how this could be true. And this conceptual problem is what Nagel is trying to make clear in the above argument. This is another way of getting at the hard problem of consciousness.

Frank Jackson’s famous knowledge argument makes a similar point. Jackson imagines a super scientist, whom he dubs “Mary,” who knows all the physical facts about color vision. Not only is she the world’s expert on color vision, she knows all there is to know about color vision. She can explain how certain wavelengths of light strike the cones in the retina and send signals via the optic nerve to the brain. She understands how the brain interprets these signals and eventually communicates with the motor cortex that sends signals to produce speech such as, “that rose is a brilliant shade of red.” Mary understands all the causal processes of the brain that are connected to color vision. However, Mary understands this without ever having experienced any color. Jackson imagines that this is because she has been kept in a black and white room and has only ever had access to black and white things. So the books she reads and the things she investigates of the outside world (via a black and white monitor in her black and white room) are only ever black and white, never any other color. Now what will happen when Mary is released from the room and sees color for the first time? Suppose she is released and sees a red rose. What will she say? Jackson’s claim was that Mary will be surprised because she will learn something new: she will learn what it’s like to see red. But by hypothesis, Mary already knew all the physical facts of color vision. Thus, it follows that this new phenomenal fact that Mary learns (specifically, what it’s like to see red) is not the same as the physical facts about the brain (which by hypothesis she already knows).

1. Mary knows all the physical facts about color vision.

2. When Mary is released from the room and sees red for the first time, she learns something new—the phenomenal fact of what it’s like to see red.

3. Therefore, phenomenal facts are not physical facts. (from 1-2)

4. Therefore, physicalism is false. (from 3)

The upshot of both Nagel’s and Jackson’s arguments is that the phenomenal facts of conscious experience—qualia—are not reducible to brain states. This is the hard problem of consciousness, and it is the mind-body problem that arises in particular for physicalism. The hard problem is the reason why physicalists can’t simply claim a victory over dualism by invoking Ockham’s razor. Ockham’s razor assumes that the two competing explanations explain all the facts equally well but that one does so in a simpler way than the other. The problem is that if physicalism cannot explain the nature of consciousness—in particular, how brain states give rise to conscious experience—then there is something that physicalism cannot explain and, therefore, physicalists cannot so simply invoke Ockham’s razor.

Two responses to the hard problem

We will consider two contemporary responses to the hard problem: David Chalmers’s panpsychism and Daniel Dennett’s eliminativism. Although both Chalmers and Dennett work within a tradition of philosophy that privileges scientific explanation and is broadly physicalist, they have two radically different ways of addressing the hard problem. Chalmers’s response accepts that consciousness is real and that solving the hard problem will require quite a radical change in how we conceptualize the world. On the other hand, Dennett’s response attempts to argue that the hard problem isn’t really a problem because it rests on a misunderstanding of the nature of consciousness. For Dennett, consciousness is a kind of illusion and isn’t ultimately real, whereas for Chalmers consciousness is the most real thing we know. The disagreement between these two philosophers returns us, again, to the most fundamental divide within the philosophy of mind: that between Cartesians, on the one hand, and behaviorists, on the other.

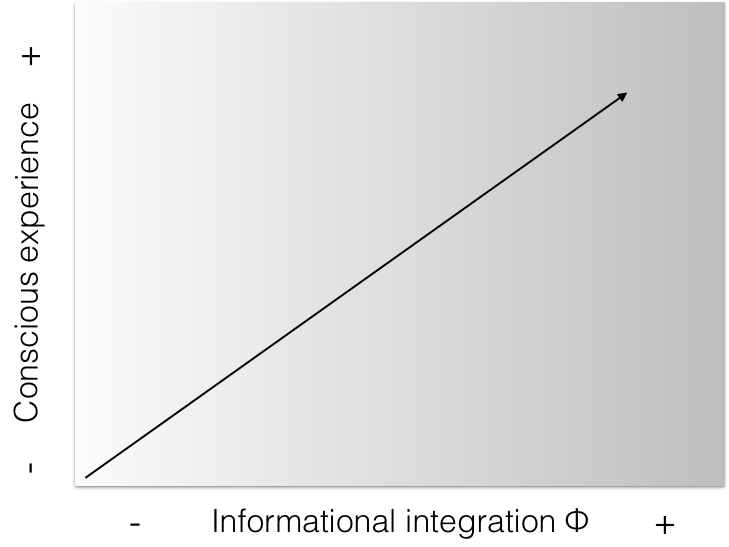

To understand Chalmers’s response to the hard problem, we must first understand what he means by a “basic entity.” A basic entity is one that science posits but that cannot be further analyzed in terms of any other kind of entity. Can you think of what kinds of entities would fit this description? Or which science you would look to in order to find basic entities? If you’re thinking physics, then you’re correct. Think of an atom. Originally, atoms were thought of as the most basic building blocks of the universe; the term “atom” literally means “uncuttable” (from the Greek “a” = not + “tomos” = cut). So atoms were originally thought of as basic entities because there was nothing smaller. As we now know, this turned out to be incorrect because there are even smaller particles such as electrons, protons, quarks, and so on. But presumably physics will eventually arrive at basic entities that cannot be reduced to anything further. Mental states are not typically thought of as basic entities because they are studied by higher-level sciences—psychology and neuroscience. So mental states, such as my perception of the red rose, are not basic entities. For example, brain states are ultimately analyzable in terms of brain chemistry, and chemistry, in turn, is ultimately analyzable in terms of physics (not that anyone would care to carry out that analysis!). But Chalmers’s radical claim is that consciousness is a basic entity. That is, the qualia—what it’s like to see red, smell coffee, and so on—that constitute our first-person conscious experience of the world cannot be further analyzed in terms of any other thing. They are what they are and nothing else. This doesn’t mean that our conscious experiences don’t correlate with the existence of certain brain states, according to Chalmers. Perhaps my experience of the smell of coffee correlates with a certain kind of brain state.
But Chalmers’s point is that that correlation is basic; the coffee smell qualia are not the same thing as the brain state with which they might be correlated. Rather, the brain state and the conscious experience are just two radically different things that happen to be correlated. Whereas brain states reduce to further, more basic, entities, conscious states don’t. As Chalmers sees it, the science of consciousness should proceed by studying these correlations. We might discover all kinds of things about the nature of consciousness by treating the science of consciousness as irreducibly correlational. Chalmers suggests as an orienting principle the idea that consciousness emerges as a function of the “informational integration” of an organism (including artificially intelligent “organisms”). What is informational integration? In short, informational integration refers to the complexity of the organism’s control mechanism—its “brain.” Simple organisms have very few inputs from the environment, and their “brains” manipulate that information in fairly simple ways. Take an ant, for example. We pretty much understand exactly how ants work, and as far as animals go, they are pretty simple. We can basically already duplicate the level of intelligence of an ant with machines that we can build. So the informational integration of an ant’s brain is pretty low. A thermostat has some level of informational integration, too. For example, it takes in information about the ambient temperature of a room and then sends a signal to either turn the furnace on or off depending on the temperature reading. That is a very simple behavior, and the informational integration inside the “brain” of a thermostat is very simple. Chalmers’s idea is that complex consciousness like ours emerges when the informational integration is high—that is, when we are dealing with a very complex brain. The less complex the brain, the less rich the conscious experience.
Here is a law that Chalmers suggests could orient the scientific study of consciousness:

This graph just says that as informational integration increases, so does the complexity of the associated conscious experience. Again, the conscious experience doesn’t reduce to informational integration, since that would only run headlong into the hard problem—a problem that Chalmers thinks is unsolvable.

The graph also says something else. As drawn, it looks like even information processing systems whose informational integration is low (for example, a thermostat or tree) also have some non-negligible level of conscious experience. That is a strange idea; no one really thinks that a thermostat is conscious, and the idea that plants might have some level of conscious experience will seem strange to most. This idea is sometimes referred to as panpsychism (“pan” = all, “psyche” = mind)—there is “mind” distributed throughout everything in the world. Panpsychism is a radical departure from traditional Western views of the mind, which see minds as the purview of animals and, on some views, of human beings alone. Chalmers’s panpsychism still draws a line between objects that process information (things like thermostats, sunflowers, and so on) and those that don’t (such as rocks), but it is still quite a radical departure from traditional Western views. It is not, however, a radical departure from all sorts of older, prescientific and indigenous views of the natural world, according to which everything in the natural world, including plants and streams, possesses some sort of spirit—a mind of some sort. In any case, Chalmers thinks that there are other interpretations of his view that don’t require the move to panpsychism. For example, perhaps conscious experience only emerges once information processing reaches a certain level of complexity. This interpretation would be more consistent with traditional Western views of the mind in the sense that one could specify that only organisms with a very complex information processing system, such as the human brain, possess conscious experience. (Graphically, based on the above graph, this would mean the lowest level of conscious experience wouldn’t start until much higher up the y-axis.)

Daniel Dennett’s response to the hard problem fundamentally differs from Chalmers’s. Whereas Chalmers posits qualia as real aspects of our conscious experience, Dennett denies that qualia exist. Rather, Dennett thinks that consciousness is a kind of illusion foisted upon us by our brain. Dennett’s perennial favorite example for illustrating the illusion of consciousness concerns our visual field. From our perspective, the world presented to us visually looks uniformly colored and without any “holes.” However, we know that this is not actually the case. The cones, the photoreceptors in the retina responsible for color vision, are concentrated near the center of the retina and are sparse in the periphery; as a result, you are not actually seeing the colors of objects at the periphery of your visual field. (You can test this by having someone hold up an object you haven’t seen before on one side of your visual field and move it back and forth until you are able to see the motion. Then try to guess the color of the object. Although you’ll be able to see the object’s motion, you won’t have a clue as to its color, if you do it correctly.) Although it seems to us as if there is a visual field that is wholly colored, it isn’t really that way. This is the illusion of consciousness that Dennett is trying to get us to acknowledge; things are not really as they appear. There’s another aspect of this illusion of our visual field: our blind spot. The location where the optic nerve exits the retina contains no photoreceptors and so conveys no visual information; this is known as the blind spot. There are all kinds of illustrations that reveal your blind spot. However, the important point that Dennett wants to make is that from our first-person conscious experience it never appears that there is any gap in our picture of the world. And yet we know that there is. This again is an illustration of what Dennett means by the illusion of conscious experience.
Dennett does more than simply give fun examples that illustrate the strangeness of consciousness; he has also famously attacked the idea that there are qualia. Recall that qualia are the purely qualitative aspects of our conscious experiences—for example, the smell of coffee, the feeling of a painful sunburn (as opposed to the pain of a headache), or the feeling of an orgasm. Qualia are what are supposed to create problems for the physicalist, since it doesn’t seem that purely qualitative feels could be nothing more than the buzzing of neurons in the brain. Since qualia are what create the trouble for physicalism and since Dennett is a physicalist, one can understand why Dennett targets qualia and tries to convince us that they don’t exist.

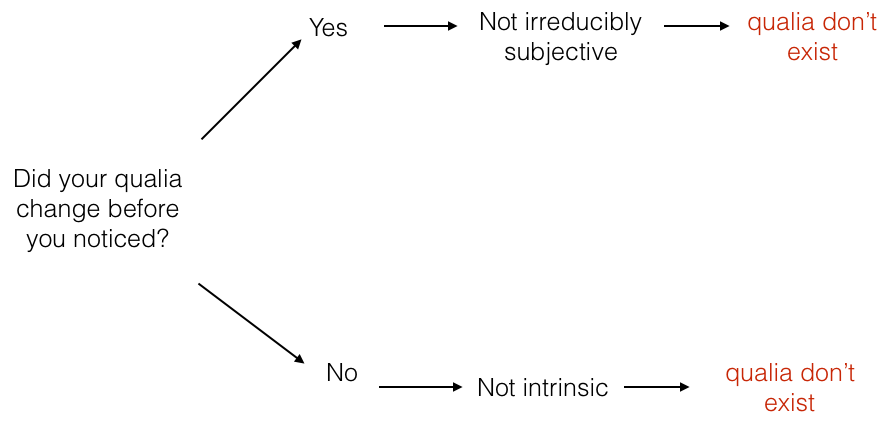

If you’re going to argue against something’s existence, the best way to do that is to first define precisely what it is you are trying to deny. Then you argue that, as defined, such things cannot exist. This is exactly what Dennett does with qualia.[4] He defines qualia as the qualitative aspects of our first-person conscious experience that are a) irreducibly first-person (meaning that they are inaccessible to third-person, objective investigation) and b) intrinsic properties of one’s conscious experience (meaning that they are what they are independent of anything else). Dennett argues that these two properties (irreducibly first-person and intrinsic) are in tension with each other—that is, there can’t be an entity which possesses both of these properties. But since both of these properties are part of the definition of qualia, it follows that qualia can’t exist—they’re like a square circle.

Change blindness is a widely studied phenomenon in cognitive psychology. Some of the demonstrations of it are quite amazing and have made it into the popular media many times over the last couple of decades. One of the most popular research paradigms for studying change blindness is called the flicker paradigm. In the flicker paradigm, two images that are the same except for some fairly obvious difference are alternated in fairly rapid succession, with a “mask” (a blank black or white screen) between them. What is surprising is that it is very difficult to see even fairly large differences between the two images. So let’s suppose that you are viewing these flickering images and trying to figure out what the difference between them is but that you haven’t figured it out yet. As Dennett notes, there are of course all kinds of changes going on in your brain as these images flicker. For example, the activity of your photoreceptors changes with the changing images. In the case of a patch of color that differs between the two images, the cones in your retina are conveying different information for each image. Dennett asks: “Before you noticed the changing color, were your color qualia changing for that region?” The problem is that any way you answer this question spells defeat for the defender of qualia, because they have to give up either (a) the irreducible subjectivity of qualia or (b) their intrinsicness. So suppose the answer to Dennett’s question is that your qualia are changing. In that case, since your qualia changed without your noticing, you do not have any special or privileged access to them, in which case they aren’t irreducibly subjective, since subjective phenomena are by definition something we alone have access to. So it seems that the defender of qualia should reject this answer. Then suppose, on the other hand, that your qualia aren’t changing. In that case, your qualia can’t change unless you notice them changing.
But that makes it look like qualia aren’t really intrinsic after all, since their reality is constituted by whether you notice them or not. And “noticings” are relational properties, not intrinsic properties. Furthermore, Dennett notes that if the existence of qualia depends on one’s ability to notice or report them, then even philosophical zombies would have qualia, since noticings/reports are behavioral or functional properties and philosophical zombies would have these by definition. So it seems that the qualia defender should reject this answer as well. But in that case, there’s no plausible answer that the qualia defender can give to Dennett’s question. Dennett’s argument has the form of a classic dilemma, as illustrated below:

Dennett thinks that the reason there is no good answer to the question is that the concept of qualia is actually deeply confused and should be rejected. But if we reject the existence of qualia, it seems that we reject the existence of the thing that was supposed to have caused problems for physicalism in the first place. On Dennett’s view, qualia are a kind of illusion, and once we realize this, the only task will be to explain why we have this illusion rather than trying to accommodate qualia in our metaphysical view of the world. The latter is Chalmers’s approach whereas the former is Dennett’s.

Study questions

- True or false: One popular way of thinking about how the mind works is by analogy with how a computer works: the brain is a complex syntactic engine that uses its own kind of language—a language that has original intentionality.

- True or false: One good way of explaining how the mind understands things is to posit a little man inside the head that does the understanding.

- True or false: The mind-body problem is the same, exact problem for both physicalism and dualism.

- True or false: John Searle agrees with Alan Turing that the relevant test for whether a machine can think is the test of whether or not the machine behaves in a way that convinces us it is intelligent.

- True or false: One good reply to the Chinese Room argument is just to note that we have exactly the same behavioral evidence that other people have minds as we would of a machine that passed the Turing Test.

- True or false: According to interpretationism, mental representations are things we attribute to others in order to help us predict and explain their behaviors, and therefore it follows that mental representations must be real.

- True or false: This chapter considers two different aspects of our mental lives: mental representation (or intentionality) and consciousness. But the two really reduce to the exact same philosophical problem of mind.

- True or false: The hard problem is the problem of understanding how the brain causes intelligent behavior.

- True or false: The knowledge argument is an argument against physicalism.

- True or false: Dennett’s solution to the hard problem turns out to be the same as Chalmers’s solution.

For deeper thought

- How does the hard problem differ from the easy problems of brain science?

- If the Turing Test isn’t the best test for determining whether a machine is thinking, can you think of a better test?

- According to physics, nothing in the world is really red in the way we perceive it. Rather, redness is just a certain wavelength of light that our senses interpret in a particular way (some other creature’s sensory system might interpret that same physical phenomenon in a very different way). By the same token, redness does not exist in the brain: if you are seeing red then I cannot also see the red by looking at your brain. In this case, where is the redness if it isn’t in the world and it also isn’t in the brain? And does this prove that redness is not a physical thing, thus vindicating dualism? Why or why not?

- Could someone be in pain and yet not know it? If so, how would we be able to tell they were in pain? If not, then aren’t pain qualia real? And so wouldn’t that prove that qualia are real (if pain is)?

- According to Chalmers’s view, is it theoretically possible for a machine to be conscious? Why or why not?

1. Readers who are familiar with the metaphysics of mind will notice that I have left out an important option: monism, the idea that there is ultimately only one kind of thing in the world and thus the mental and the physical do not fundamentally differ. Physicalism is one version of monism, but there are many others. Bishop George Berkeley’s idealism is a kind of monism, as is the panpsychism of Leibniz and Spinoza. I have chosen to focus on physicalism for pedagogical reasons: because of its prominence in contemporary philosophy of mind, because of its intuitive plausibility to those living in an age of neuroscience, and because the nuances of the arguments for monism are beyond the scope of this introductory treatment of the problem.

2. We could actually retell the Chinese room thought experiment in such a way that what the man inside the room was manipulating was strings of 1s and 0s (what is called “binary code”). The point remains the same in either case: whether the program is defined over Chinese characters or strings of 1s and 0s, from the perspective of the room, none of it has any meaning and there’s no understanding required in giving the appropriate outputs.

3. Nothing has yet, claims to the contrary notwithstanding.

4. Daniel Dennett, Sweet Dreams: Philosophical Obstacles to a Science of Consciousness (MIT Press, 2006).